Mastodon Defence Command: The Scam Wave

It's not a sci-fi blockbuster, it's a serious issue.

The Online Safety Act was passed into UK law in October 2023. Its roll-out is a slow process which has been taking place behind the scenes for a long time. Only now are we seeing the effects as various pieces of the legislation are made active.

On paper, the OSA is a good thing. It brings in protections for everyone online, children and adults alike.

But, as we so often see, paper is not the reality. Many are scared that the OSA will lead to over-policing of online content, will prevent important current news stories being shared, will stop under-18s from receiving mental health support, will hide LGBTQ+ stories, and much more.

You could say, "Well, that won't actually happen. They're not going to police that." But it's not up to them to police it. It's up to the platforms, and many platforms do not want to be prosecuted. As a result, they will throw up walls to protect themselves, not their users.

We're already seeing on X and Reddit that videos from Gaza are being blocked in the UK due to local laws. On Bluesky you must verify your age to use all the features of a Bluesky account.

> Twitter has begun blocking videos of Israel’s atrocities in Gaza for users in the UK.
>
> Twitter says the censorship is due to the UK’s new Online Safety Act, which mandates age verification for sensitive content.
>
> However, Twitter does not allow users to manually verify their age. pic.twitter.com/E0C4UGqICE
>
> Evolve Politics (@evolvepolitics), July 25, 2025

The real issue here is the lack of clarity in the OSA. It is not easy to understand, even for the most clued-up online service experts, and where it can be understood, it uses empty terms without offering any real guidance.

For example, many sites should have age verification if they could lead to children seeing harmful content (think porn, violent content, etc.), but the age verification only needs to be "highly effective". There is no explanation of what that means or how to achieve it.

This empty, badly communicated legislation has been taken advantage of by scammers, especially on Mastodon.

The Scam

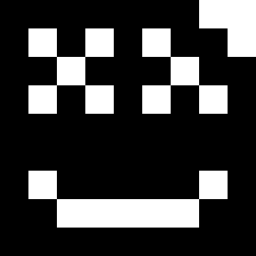

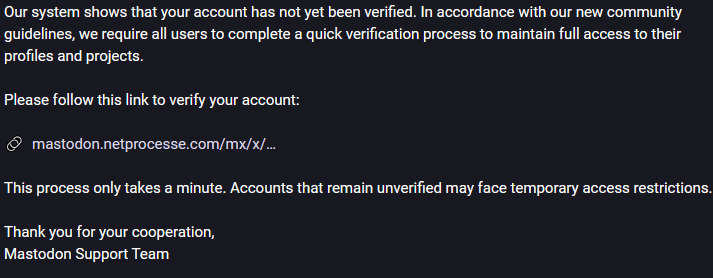

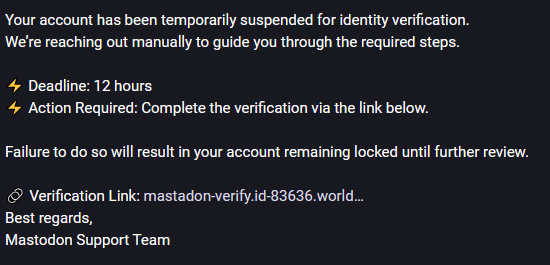

In a coordinated, and clearly planned, attack across Mastodon's side of the Fediverse, thousands of accounts began replying to posts, claiming that users were violating rules and needed to verify themselves.

The images above are taken from reported content received on indieweb.social.

You get the idea.

But what's striking about this is the organisation of the attack. The scammers waited until the OSA put age verification into force, recognised that Mastodon had not taken control of the situation, and knew exactly how they would reach out across the Fediverse to enact this plan.

They also made themselves look like Mastodon. The most believable of these accounts I saw was @mastodon_verification@mastodon.social.

In my opinion, one of the great weaknesses of Mastodon is its lack of a go-to social presence.

Ironic, right? But where do you go to get official Mastodon news? Which account do you follow? Is it @mastodon@mastodon.social or is it @staff@mastodon.social or is it @MastodonEngineering@mastodon.social? Or, do you have to find a way to understand the GitHub and keep up to date that way?

This problem has left a hole and the scammers have filled it.

Even the (probably) official statement about the scam accounts from @staff@mastodon.social contradicts itself, claiming official accounts are verified from the joinmastodon.org domain (which this account is not) or will have a 'special role badge' - something which can't be seen across 90% of Mastodon servers.
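If you want to check the "verified from the joinmastodon.org domain" claim for yourself, here is a rough sketch of how it could be done. It assumes you already have an account's JSON as returned by the Mastodon API, where each profile field carries an HTML `value` and a `verified_at` timestamp that is empty when the link has not been verified. The helper names (`verified_domains`, `has_official_link`) and the sample profiles are made up for illustration, and the result only reflects what the server you queried has verified. Treat it as a starting point, not an official check.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Domain named in the statement; everything else here is an assumption.
OFFICIAL_DOMAIN = "joinmastodon.org"


class _LinkCollector(HTMLParser):
    """Collects href values from <a> tags inside a profile field's HTML."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def verified_domains(account: dict) -> set[str]:
    """Return hostnames of profile links the server has marked as verified."""
    domains = set()
    for field in account.get("fields", []):
        if not field.get("verified_at"):
            continue  # a link anyone can type in, with no verification behind it
        parser = _LinkCollector()
        parser.feed(field.get("value", ""))
        for href in parser.hrefs:
            host = urlparse(href).hostname
            if host:
                domains.add(host.lower())
    return domains


def has_official_link(account: dict, official: str = OFFICIAL_DOMAIN) -> bool:
    """True if a verified link points at the official domain or a subdomain of it."""
    return any(
        host == official or host.endswith("." + official)
        for host in verified_domains(account)
    )


# Hypothetical profiles, invented for this example.
verified_profile = {"fields": [{
    "name": "Website",
    "value": '<a href="https://joinmastodon.org" rel="me">joinmastodon.org</a>',
    "verified_at": "2023-01-01T00:00:00.000+00:00",
}]}
scam_profile = {"fields": [{
    "name": "Website",
    "value": '<a href="https://joinmastodon.org">joinmastodon.org</a>',
    "verified_at": None,  # the link is displayed but was never verified
}]}

print(has_official_link(verified_profile))
print(has_official_link(scam_profile))
```

The exact-match-or-subdomain comparison is deliberate: a plain substring test would happily accept a lookalike host such as `joinmastodon.org.example.com`.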

If you're not on mastodon.social, then finding the accounts I mentioned above is, in itself, a challenge.

The Fix

Now, I am not saying this is Mastodon's fault. It is not. It is a failure of Ofcom and the OSA: they have not defined how social media sites can comply with the new rules, and they have not created a standard.

The OSA should be clearer on how "highly effective" age verification can be achieved and where it is needed.

Thankfully, this scam was quickly picked up by server admins (most of whom are devoting their free time to do this, so say thank you to them!). The strange, patchy web of server admin collaboration kicked into action and began suspending many of the offending accounts. Now, very few reports are coming in regarding these accounts.

But it should've been quicker. Mastodon's statement could've come sooner and it probably should've come from @mastodon@mastodon.social, not @staff@mastodon.social. Mastodon knew about the OSA and knew they would have to take action (I imagine it is something they are still discussing) but they should've made their position clear to users earlier, before deadlines passed and scammers stepped in.

It's so hard to get a message out across a decentralised network. It's so hard to apply something like the OSA to a decentralised network. But, whether we want to or not, we have to work this out for the future of the Open Social Web.

And we have to give server admins more support for what they do. Without them, the Fediverse you know and love would look incredibly different.